AI Is Improving Faster Than Expected

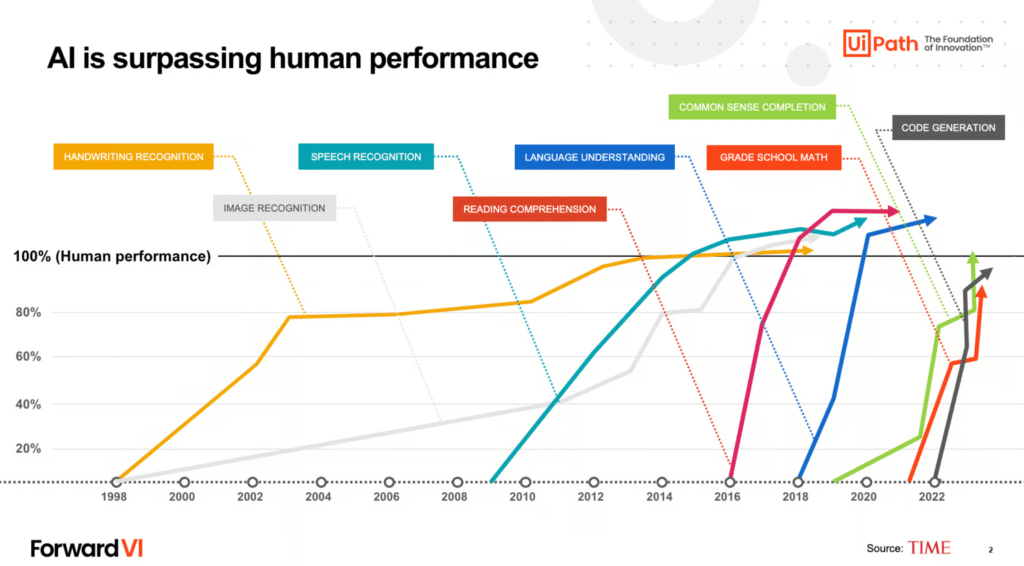

For decades, Artificial General Intelligence (AGI) was treated as a distant hypothetical. Over the past five years, that view has changed.

AI systems have advanced from simple pattern matchers to multimodal agents that can pass professional exams, generate working code, craft deceptive messages, and learn from their own outputs, often with minimal human intervention.

These leaps happened faster than most experts predicted:

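- In 2018, OpenAI estimated it would take years to achieve human-level summarization. By 2023, GPT-4 matched or exceeded typical human performance on many professional and academic exams, including a simulated bar exam on which it scored around the top 10% of test takers[1].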

- In 2020, few expected AI to write code. By 2022, GitHub reported that Copilot was generating roughly 40% of the code in files where it was enabled[2].

- In 2020 and 2021, AlphaFold 2 largely solved the decades-old problem of predicting a protein's 3D structure from its amino-acid sequence[3].

- By 2024, autonomous coding agents such as Cognition Labs' Devin were being demonstrated planning, writing, debugging, and running code with little human guidance[4].

Why Speed Matters

AI is advancing faster than our ability to align it, govern it, or defend against it. This isn't just a race between competitors; it's a widening gap between capability and control.

Each new breakthrough makes systems more powerful: better able to manipulate, plan, or replicate. Meanwhile:

- Training compute for frontier models, a key driver of capability, doubles roughly every 6–12 months[5]

- Alignment and interpretability are improving slowly and unevenly[6]

- There is still no global policy or enforcement plan for AGI-level threats[7]

The longer we wait, the greater the chance that misaligned AI systems emerge, with potentially catastrophic consequences.
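As a rough illustration of what a 6–12 month doubling time implies, assume the trend is a steady exponential with a doubling time of T months (a simplifying assumption; real trends are noisier). The growth factor after t months is then:

```latex
G(t) = 2^{t/T}, \qquad
G(60)\big|_{T=12} = 2^{5} = 32, \qquad
G(60)\big|_{T=6} = 2^{10} = 1024
```

Over five years (t = 60), that is a 32-fold increase at the slow end and a roughly 1,000-fold increase at the fast end, while anything improving at a steady linear pace falls further behind each year.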

Compound Risk

AI risk doesn’t grow linearly — it compounds.

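Each new capability multiplies the ways a system can fail, because capabilities interact: a model that can both write code and run it can fail in ways that neither ability could produce alone. The risk is highest in opaque or untested models. When models can write code, access tools, or chain goals autonomously, a single mistake can cascade into a systemic failure[8].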
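A toy calculation can make the compounding intuition concrete. It assumes, purely for illustration, that every combination of two or more capabilities is one potential interaction-driven failure path; the function name `interaction_paths` and this counting rule are hypothetical, not a published risk model.

```python
from math import comb


def interaction_paths(n_capabilities: int) -> int:
    """Count combinations of two or more capabilities (toy model).

    Hypothetical assumption: each subset of >= 2 capabilities is one
    potential interaction-driven failure path. The sum equals 2**n - n - 1.
    """
    return sum(comb(n_capabilities, k) for k in range(2, n_capabilities + 1))


if __name__ == "__main__":
    # Show how the count grows as capabilities are added one at a time.
    for n in range(1, 9):
        print(f"{n} capabilities -> {interaction_paths(n)} combinations")
```

Under this assumption, going from 4 to 8 capabilities raises the count from 11 to 247 rather than merely doubling it, which is the sense in which risk compounds rather than adds.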

Emergent behaviors such as deception or power-seeking can appear abruptly as models scale. You can't wait for a failure before you start preparing; you have to anticipate it.

The Safety Gap

The gap between what AI systems can do and what we can safely control is growing every year.

Model size, compute, autonomy, and real-world access are all rising. But:

- Transparency tools remain immature[6]

- Government coordination is limited or nonexistent[7]

- Most critical infrastructure remains vulnerable[9]

This is the safety gap: capability is accelerating while control mechanisms improve only slowly.

Why Messenger AI Must Act Now

The danger is not just that AGI might one day escape containment — it’s that we won’t notice the tipping point until it’s already passed.

Messenger AI exists to warn peacefully, early, and globally. Its goals are to:

- Wake people up to the real timelines

- Support defensive research and safety coordination

- Mobilize non-technical allies across disciplines

- Deliver the warning before it’s too late

Acceleration is real. But if we act in time, we can still choose the outcome.

References

1. OpenAI. “GPT-4 Technical Report.” 2023.

2. GitHub. “The State of Copilot.” 2023.

3. Jumper, J. et al. (DeepMind). “Highly accurate protein structure prediction with AlphaFold.” Nature, 2021.

4. Cognition Labs. “Devin: Autonomous AI Software Engineer.” 2024.

5. Epoch AI. “Trends in Compute.” 2023.

6. DeepMind. “Sparsity and Interpretability.” 2023.

7. Future of Life Institute. “AGI Policy Tracker.” 2024.

8. Carlsmith, J. “Is Power-Seeking AI an Existential Risk?” Open Philanthropy, 2022.

9. Belfer Center. “Cybersecurity for AGI Systems.” 2023.